How I integrated Google Analytics to display visitor counts in Ghost using Azure Services

Learn how to track and visualize Ghost blog visitors by combining Google Analytics insights with Azure’s cloud tools

You likely searched the internet for how you can display the number of page visitors in your Ghost theme, only to get disappointed.

I ran into the same issue and, stubborn as I am, I wanted to give it a shot. Because I developed my own Ghost theme, I quickly found myself down a rabbit hole. But every rabbit hole has a way out.

In this blog post, I'll show you how to integrate Google Analytics to display the number of monthly visitors using Azure Services. I'm assuming you already have a Ghost theme development setup, an active Azure subscription, and the basic Google Analytics integration enabled.

Create a Google Service Account

Integrating with Google Analytics and sending data is easy. Retrieving it, not so much. The Google Analytics Data API doesn't accept API keys (anymore), so I needed another way to fetch the data: a Google Service Account.

A service account lets you limit its privileges so it can only read the data you need.
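To make "service account" less abstract: the account doesn't send a static key with each request. Instead, it signs a short-lived JSON Web Token (JWT) with its private key and exchanges that token at Google's OAuth 2.0 token endpoint for an access token. The Node.js sketch below shows how such an assertion is built. The function names are hypothetical, and the runbook used later in this post authenticates in PowerShell, so treat this as an illustration of the flow rather than the code that runs in Azure.

```javascript
// Illustrative sketch of Google's service-account (JWT bearer) flow.
// Assumes Node.js 16+; buildJwtAssertion and buildTokenRequestBody are
// hypothetical helper names, not part of any Google library.
const crypto = require('node:crypto');

// Base64url-encode without padding, as JWTs require
function base64url(input) {
  return Buffer.from(input).toString('base64url');
}

// `key` is the parsed service account JSON; only client_email and
// private_key are used. Times are seconds since the Unix epoch.
function buildJwtAssertion(key, nowSeconds = Math.floor(Date.now() / 1000)) {
  const header = { alg: 'RS256', typ: 'JWT' };
  const claims = {
    iss: key.client_email,
    // Read-only access to Google Analytics data
    scope: 'https://www.googleapis.com/auth/analytics.readonly',
    aud: 'https://oauth2.googleapis.com/token',
    iat: nowSeconds,
    exp: nowSeconds + 3600 // Google accepts a lifetime of at most one hour
  };
  const signingInput =
    `${base64url(JSON.stringify(header))}.${base64url(JSON.stringify(claims))}`;
  // RS256 = RSASSA-PKCS1-v1_5 with SHA-256 (Node's default padding for RSA keys)
  const signature = crypto.sign('sha256', Buffer.from(signingInput), key.private_key);
  return `${signingInput}.${base64url(signature)}`;
}

// Form-encoded body to POST to https://oauth2.googleapis.com/token
function buildTokenRequestBody(assertion) {
  return new URLSearchParams({
    grant_type: 'urn:ietf:params:oauth:grant-type:jwt-bearer',
    assertion
  }).toString();
}
```

POSTing that body with `Content-Type: application/x-www-form-urlencoded` returns JSON containing an `access_token`, which then goes into the `Authorization: Bearer` header of Data API calls. Requesting only the `analytics.readonly` scope keeps the token read-only on the API side, on top of the Viewer role the account gets in Google Analytics.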

I followed these steps to create a Google Service Account:

- Navigate to https://console.cloud.google.com/.

- Sign in with your Google account.

- Create a "New project" or select an existing one.

- Click the "Select a project" dropdown at the top.

- Click "New project".

- Name it ghost-theme-analytics.

- Click "Create".

With the project created, it was time to enable the Google Analytics Data API:

- On the Google Cloud Console main page, click "APIs & Services" in the Quick access menu.

- In the left-hand menu, click "Library" and search for "Google Analytics Data API".

- Click "Enable".

The Google Analytics Data API is now enabled, which allows access to Google Analytics report data. Next, it was time to create the service account:

- On the same page (APIs & Services), click the "Credentials" tab.

- Click "Manage service accounts", which takes you to IAM & Admin -> Service accounts.

- Click the "Create service account" button.

- Give the Service account a name.

- Optionally, give it a description.

- Click "Finish" to create the account.

Before moving on to the next section, I also created a key for the account:

- Click on the just-created Google Service Account.

- Click the "Keys" tab.

- Add key -> Create new key.

- Select "JSON" and click "Create".

After clicking "Create", a JSON key file was downloaded to my machine. It's needed later, so keep it somewhere safe.
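The downloaded file is a plain JSON document. Among other fields, it contains `"type": "service_account"`, `client_email`, and `private_key`, which are the ones this setup relies on. A small sanity check like the one below can catch a wrong or truncated file before it ends up in a deployment. It's a hypothetical Node.js helper, not something the rest of the setup requires.

```javascript
// Hypothetical sanity check for a downloaded service account key file.
// Assumes Node.js; only the fields used elsewhere in this post are checked.
const fs = require('node:fs');

function parseServiceAccountKey(jsonText) {
  const key = JSON.parse(jsonText);
  if (key.type !== 'service_account') {
    throw new Error(`Expected type "service_account", got "${key.type}"`);
  }
  for (const field of ['client_email', 'private_key']) {
    if (typeof key[field] !== 'string' || key[field].length === 0) {
      throw new Error(`Missing or empty field: ${field}`);
    }
  }
  // client_email is the address added to Google Analytics in the next section
  return { clientEmail: key.client_email, privateKey: key.private_key };
}

// Convenience wrapper that reads the key file from disk
function loadServiceAccountKey(path) {
  return parseServiceAccountKey(fs.readFileSync(path, 'utf8'));
}
```

For example, `loadServiceAccountKey('GoogleAccess.json').clientEmail` returns the e-mail address you need in the next section.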

Configure Google Analytics access

With the Google Service Account created, it can now be added to Google Analytics with limited privileges:

- Open the downloaded JSON file.

- Find the client_email field. It should look like: "client_email": "ghost-analytics-reader@your-project.iam.gserviceaccount.com"

- Copy the e-mail address.

- Navigate to https://analytics.google.com/ and sign in with your account if you aren't already.

- Click the "Admin" gear icon in the bottom-left corner.

- Expand the property settings and select "Property user management".

- Click the "+" button in the top-right corner and click "Add user".

- Paste the e-mail address and select the Viewer role.

- Uncheck "Notify new users by email" and click "Add".

The Google Service Account now has access to read report data. I also copied the property ID from the Property details page (a number such as 123456789), which is needed in the next step.
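A common mix-up at this point is the measurement ID (it starts with `G-` and appears in the tracking snippet) versus the numeric property ID. The Data API addresses reports as `properties/{propertyId}`, so only the numeric value works. A hypothetical check before putting the value into the GA4PropertyId variable could look like this:

```javascript
// Hypothetical helper: validate a GA4 property ID and build the resource
// name the Data API expects, for example "properties/123456789".
function toPropertyResource(propertyId) {
  const id = String(propertyId).trim();
  if (/^G-/i.test(id)) {
    // Measurement IDs (G-XXXXXXX) identify a web data stream, not a property
    throw new Error('This looks like a measurement ID (G-...), not a property ID');
  }
  if (!/^\d+$/.test(id)) {
    throw new Error(`A property ID contains digits only, got "${id}"`);
  }
  return `properties/${id}`;
}
```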

Set up Azure resources to store visitor counts

If you've tried integrating Google Analytics data already, you know that exposing the private key stored in the JSON file is a bad idea, especially on the client side. Yet that was my initial approach. After reconsidering, and following the advice shared on Ghost's forum, I wanted a minimal setup on a separate backbone that fetches the number of visitors once a day.

That backbone is Azure. There are many resources you can use, but I chose an Azure Storage Account and an Automation Account. The Automation Account supports scheduled jobs, which run a small script that queries the data from Google Analytics. Because the service account key is stored as an encrypted Automation variable, I could inject it into the script safely. Let's look at it in more detail.

- Create a new file named main.bicep.

- Start with the bare minimum to deploy an Azure Resource Group:

targetScope = 'subscription'

// Parameters
@description('Name of the resource group')
param resourceGroupName string = '<resourceGroupName>'

@description('Azure region for all resources')
param location string = 'westeurope'

@description('Azure automation account name')
param automationAccountName string = '<automationAccountName>'

@description('Storage account name')
param storageAccountName string = '<storageAccountName>'

module rg 'br/public:avm/res/resources/resource-group:0.4.1' = {
  params: {
    name: resourceGroupName
    location: location
  }
}

- Add the Automation Account module after the rg module. The explicit dependsOn makes sure the resource group exists before the Automation Account is deployed into it:

module aa 'br/public:avm/res/automation/automation-account:0.16.1' = {
  scope: resourceGroup(resourceGroupName)
  params: {
    name: automationAccountName
    skuName: 'Free'
    location: location
    managedIdentities: {
      systemAssigned: true
    }
    runbooks: [
      {
        description: 'Runbook to fetch Google Analytics data'
        name: 'Set-GoogleAnalyticsDataVisitorStats'
        type: 'PowerShell72'
        version: '1.0.0.0'
        uri: 'https://raw.githubusercontent.com/Gijsreyn/random-tools-gijs/refs/heads/main/powershell/scripts/Ghost/Set-GoogleAnalyticsDataVisitorStats.ps1'
      }
    ]
    variables: [
      {
        name: 'GA4PropertyId'
        value: '' // Replace with your actual GA4 Property ID copied earlier
        isEncrypted: false
      }
      {
        name: 'GoogleAccessJson'
        isEncrypted: true
        value: loadTextContent('GoogleAccess.json')
      }
    ]
    jobSchedules: [
      {
        runbookName: 'Set-GoogleAnalyticsDataVisitorStats'
        scheduleName: 'DailySchedule'
      }
    ]
    schedules: [
      {
        advancedSchedule: {}
        expiryTime: '9999-12-31T13:00'
        frequency: 'Day'
        interval: 1
        name: 'DailySchedule'
        startTime: ''
        timeZone: 'Europe/Berlin'
      }
    ]
  }
  dependsOn: [
    rg
  ]
}

- Take the downloaded JSON file (named something like snappy-run-<identifier>.json), rename it to GoogleAccess.json, and place it in the same folder as main.bicep. The loadTextContent('GoogleAccess.json') call reads it at deployment time.

- Create an Azure Storage Account with an analytics container, and grant the Automation Account's managed identity the access it needs: Storage Blob Data Contributor on the container (to upload the file), Storage Account Key Operator Service Role on the account, and Reader on the resource group:

module st 'br/public:avm/res/storage/storage-account:0.26.2' = {
  scope: resourceGroup(resourceGroupName)
  params: {
    name: storageAccountName
    location: location
    skuName: 'Standard_LRS'
    kind: 'StorageV2'
    allowBlobPublicAccess: true
    publicNetworkAccess: 'Enabled'
    networkAcls: {}
    blobServices: {
      containers: [
        {
          name: 'analytics'
          publicAccess: 'None'
          roleAssignments: [
            {
              principalId: aa.outputs.?systemAssignedMIPrincipalId!
              roleDefinitionIdOrName: 'Storage Blob Data Contributor'
              principalType: 'ServicePrincipal'
            }
          ]
        }
      ]
      corsRules: [
        {
          allowedOrigins: [
            'https://<domainName>'
          ]
          allowedMethods: [
            'GET'
            'OPTIONS'
          ]
          maxAgeInSeconds: 3600
          allowedHeaders: [
            '*'
          ]
          exposedHeaders: [
            '*'
          ]
        }
      ]
    }
    roleAssignments: [
      {
        principalId: aa.outputs.?systemAssignedMIPrincipalId!
        roleDefinitionIdOrName: 'Storage Account Key Operator Service Role'
        principalType: 'ServicePrincipal'
      }
    ]
  }
}

module readAssign 'br/public:avm/res/authorization/role-assignment/rg-scope:0.1.0' = {
  scope: resourceGroup(resourceGroupName)
  name: 'read-assign-deployment'
  params: {
    roleDefinitionIdOrName: 'Reader'
    principalId: aa.outputs.?systemAssignedMIPrincipalId!
    principalType: 'ServicePrincipal'
  }
  dependsOn: [
    rg
  ]
}

Replace <domainName> with your site's domain name, so the browser is allowed to fetch the file from your site.

The Bicep template can be deployed with the New-AzDeployment cmdlet or the az deployment sub create command. The Set-GoogleAnalyticsDataVisitorStats.ps1 script authenticates using the contents of GoogleAccess.json (stored in the encrypted GoogleAccessJson variable) and sends the following request body to query last month's data:

$body = @{
    dateRanges = @(
        @{
            startDate = $StartDate
            endDate   = $EndDate
        }
    )
    metrics = @(
        @{
            name = "activeUsers"
        }
    )
} | ConvertTo-Json -Depth 10
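For readers more at home in JavaScript, here is a sketch of the same request: how "last month" could be turned into startDate and endDate values, how the body above is sent to the Data API's runReport method, and where activeUsers sits in the response. Treating "last month" as the previous calendar month in UTC is my assumption; the runbook may compute $StartDate and $EndDate differently (the API also accepts relative values such as 30daysAgo and yesterday). The helper names are hypothetical.

```javascript
// Sketch, assuming "last month" = previous calendar month in UTC.
// Returns dates in the YYYY-MM-DD format the Data API expects.
function previousMonthRange(today = new Date()) {
  const year = today.getUTCFullYear();
  const month = today.getUTCMonth(); // 0-based current month
  const start = new Date(Date.UTC(year, month - 1, 1)); // 1st of previous month
  const end = new Date(Date.UTC(year, month, 0)); // day 0 = last day of previous month
  const fmt = (d) => d.toISOString().slice(0, 10);
  return { startDate: fmt(start), endDate: fmt(end) };
}

// Builds a fetch()-style request for properties/{id}:runReport.
// accessToken is assumed to come from the service-account token exchange.
function buildRunReportRequest(propertyId, accessToken, range) {
  return {
    url: `https://analyticsdata.googleapis.com/v1beta/properties/${propertyId}:runReport`,
    options: {
      method: 'POST',
      headers: {
        Authorization: `Bearer ${accessToken}`,
        'Content-Type': 'application/json'
      },
      // Same shape as the PowerShell $body above
      body: JSON.stringify({
        dateRanges: [{ startDate: range.startDate, endDate: range.endDate }],
        metrics: [{ name: 'activeUsers' }]
      })
    }
  };
}

// Without dimensions the report has at most one row; metric values are strings.
function extractActiveUsers(report) {
  const value = report?.rows?.[0]?.metricValues?.[0]?.value;
  return value === undefined ? 0 : Number(value);
}
```

With Node.js 18 or later, `const { url, options } = buildRunReportRequest(...)` followed by `extractActiveUsers(await (await fetch(url, options)).json())` would return the count as a number.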

Each day, the script runs, gathers the data, and writes the result to a file called activeUsers.txt in the analytics container. It authenticates with the Automation Account's system-assigned managed identity, which has the Storage Blob Data Contributor role on that container, so it can upload the file to Blob Storage.
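The upload itself boils down to one Blob service REST call, Put Blob. The sketch below builds that request, assuming the script already holds an OAuth access token for the `https://storage.azure.com/` resource obtained through the managed identity (acquiring that token is not shown). The function name and the example values are hypothetical.

```javascript
// Sketch of a Blob REST "Put Blob" request authorized with a bearer token.
// Assumption: accessToken was issued for https://storage.azure.com/.
function buildPutBlobRequest(accountName, containerName, blobName, content, accessToken) {
  return {
    url: `https://${accountName}.blob.core.windows.net/${containerName}/${encodeURIComponent(blobName)}`,
    options: {
      method: 'PUT',
      headers: {
        Authorization: `Bearer ${accessToken}`,
        // Bearer-token (OAuth) auth requires service version 2017-11-09 or later
        'x-ms-version': '2021-08-06',
        // Put Blob needs the blob type; a small text file is a block blob
        'x-ms-blob-type': 'BlockBlob',
        'Content-Type': 'text/plain; charset=utf-8'
      },
      // The file only holds the visitor count, e.g. "5234"
      body: String(content)
    }
  };
}
```

Writing only the bare number keeps the browser side trivial: the theme just reads the text and validates it.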

Understanding the JavaScript integration

The Ghost theme you're developing needs a small piece of JavaScript that fetches the visitor statistics from Azure Blob Storage and displays them on your website. Here is how the pieces fit together:

- First, the Azure Automation Account triggers the PowerShell script.

- The script retrieves data from Google Analytics using the Google Service Account.

- The data is written to Azure Blob Storage (activeUsers.txt) in a container called analytics.

- The website runs JavaScript code that reads that file through a SAS Blob URI, configured as a custom theme setting defined in package.json.

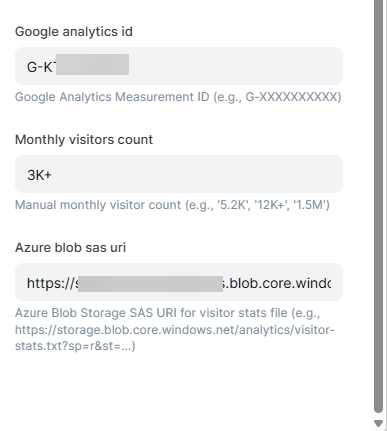

The following custom settings need to be added to the package.json file. Ghost reads custom theme settings from the custom object inside config, which is what makes them available as @custom values in templates:

"config": {
    // other config settings
    "custom": {
        "monthly_visitors_count": {
            "type": "text",
            "default": "5K+",
            "description": "Manual monthly visitor count (e.g., '5.2K', '12K+', '1.5M')"
        },
        "azure_blob_sas_uri": {
            "type": "text",
            "default": "",
            "description": "Azure Blob Storage SAS URI for the visitor stats file (e.g., https://<storageAccountName>.blob.core.windows.net/analytics/activeUsers.txt?sp=r&st=...)"
        }
    }
}

Now for the magic part. In my assets folder, I already had the familiar js directory with a file named theme.js. I added the following code to it, which fetches the visitor count from the blob based on a configuration value:

// Fetch visitor statistics from Azure Blob Storage
async function fetchVisitorDataFromBlob() {
    // Get the SAS URI from the <body> data attribute
    const sasUri = document.body.getAttribute('data-azure-blob-sas');
    if (!sasUri || !sasUri.trim()) {
        return null; // No SAS URI configured
    }

    try {
        // Fetch the visitor count from Blob Storage
        const response = await fetch(sasUri, {
            method: 'GET',
            mode: 'cors',
            headers: {
                'Cache-Control': 'no-cache'
            }
        });

        if (!response.ok) {
            throw new Error(`HTTP error! status: ${response.status}`);
        }

        const visitorCount = (await response.text()).trim();

        // Only accept a valid, positive number
        if (visitorCount && !isNaN(visitorCount) && parseInt(visitorCount, 10) > 0) {
            return visitorCount;
        }
        return null;
    } catch (error) {
        // fetch() rejects with a TypeError on CORS and network failures
        if (error.message.includes('CORS') || error.name === 'TypeError') {
            console.warn('CORS error: make sure your site origin (e.g. http://localhost:2368 during local development) is allowed in the Azure Storage Account CORS rules');
        } else {
            console.warn('Failed to fetch visitor data from Azure Blob Storage:', error.message);
        }
        return null;
    }
}

async function initGoogleAnalyticsStats() {
    const statsElement = document.getElementById('monthly-visitors');
    if (!statsElement) return;

    // 1. Try Azure Blob Storage first
    const blobVisitorCount = await fetchVisitorDataFromBlob();
    if (blobVisitorCount) {
        statsElement.textContent = blobVisitorCount;
        return;
    }

    // 2. Fall back to the manual count from the theme settings
    const manualCount = document.body.getAttribute('data-monthly-visitors');
    if (manualCount && manualCount.trim()) {
        statsElement.textContent = manualCount.trim();
        return;
    }

    // 3. Final fallback
    statsElement.textContent = '5K+';
}

// Run once the DOM is ready (or immediately if it already is)
if (document.readyState === 'loading') {
    document.addEventListener('DOMContentLoaded', initGoogleAnalyticsStats);
} else {
    initGoogleAnalyticsStats();
}
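The blob holds a raw number such as 5234, while the manual setting uses a short style like 5.2K. If you want both to look the same, the built-in `Intl.NumberFormat` API with compact notation can do the conversion. This is an optional, hypothetical addition and not part of my theme code above:

```javascript
// Optional, hypothetical helper: format a raw count in the same short
// style as the manual setting ("5.2K", "1.5M") using the built-in Intl API.
function formatVisitorCount(rawCount, locale = 'en') {
  const count = Number(rawCount);
  // Reject empty, non-numeric, zero, or negative input
  if (!Number.isFinite(count) || count <= 0) return null;
  return new Intl.NumberFormat(locale, { notation: 'compact' }).format(count);
}
```

In initGoogleAnalyticsStats, you could then write `statsElement.textContent = formatVisitorCount(blobVisitorCount) ?? blobVisitorCount;` so the raw value is still shown if formatting fails.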

The JavaScript looks for an HTML element with id="monthly-visitors" to display the count. In my theme's home page template, I simply added the following:

<div class="stat-item">
    <span class="stat-number" id="monthly-visitors">Loading...</span>
    <span class="stat-label">Monthly visitors</span>
</div>

In my default.hbs file, I pass the configuration settings to the script as data attributes on the <body> tag:

<body class="{{body_class}}" data-monthly-visitors="{{@custom.monthly_visitors_count}}" data-azure-blob-sas="{{@custom.azure_blob_sas_uri}}">

Looking a little deeper, the JavaScript code follows a strict priority order:

- Azure Blob Storage.

- Static value from the Ghost theme setting.

- Fallback to the default value (5K+).

This approach let me generate a SAS URI with read-only access to the single file. The URI is publicly visible, but so is the number displayed on the site, and because the SAS only grants read permission, nobody can write data to the Azure Storage Account container through it. In the Ghost admin panel, under "Design & branding", the setting appears so you can paste in the SAS URI.
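Before pasting a SAS URI into the theme setting, it's worth checking that it really is read-only and not already expired. Both facts are visible in the standard SAS query parameters: `sp` lists the granted permissions and `se` holds the expiry time. The hypothetical helper below inspects them; it only reads the URI and does not validate the signature itself, which only Azure can do.

```javascript
// Hypothetical check: does a SAS URI grant read-only access, and is it still valid?
// Uses the standard SAS query parameters sp (permissions), se (expiry), sig (signature).
function checkReadOnlySas(sasUri, now = new Date()) {
  const params = new URL(sasUri).searchParams;
  const permissions = params.get('sp') ?? '';
  const expiry = params.get('se');
  const problems = [];

  if (permissions !== 'r') {
    problems.push(`permissions are "${permissions}", expected "r"`);
  }
  if (!params.get('sig')) {
    problems.push('missing signature (sig)');
  }
  if (!expiry) {
    problems.push('missing expiry (se)');
  } else {
    const expiryDate = new Date(expiry);
    if (Number.isNaN(expiryDate.getTime())) {
      problems.push(`unreadable expiry "${expiry}"`);
    } else if (expiryDate <= now) {
      problems.push(`expired at ${expiry}`);
    }
  }
  return { ok: problems.length === 0, problems };
}
```

Remember that a SAS URI stops working at its expiry time, so the counter silently falls back to the manual value once it lapses; a check like this makes that visible early.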

Summary

In this blog post, you learned how to use a Google Service Account for authentication instead of API keys. An Azure Automation Account uses this account to retrieve the data safely on a daily schedule and stores it in Azure Blob Storage. Your website then reads the data through a SAS URI and displays it.

It's debatable whether this method is cost-efficient, even though I chose tiers that are either free or minimal.